The trap of testing simple orchestration

Orchestration code organizes calls to other components (dependencies) into a certain process. Such pieces of code are sometimes called coordinators or controllers. Orchestration code is usually simple: it has little or no conditional logic and no algorithmic complexity. Its main job is to delegate the work to the appropriate dependencies in a specific order. Here is an example:

    class Orchestrator {

        // ...

        // (injected) dependencies
        private final Service service;       // business logic
        private final Mapper mapper;         // field-to-field mapping
        private final Repository repository; // glue code

        public ImportantObject orchestrate(ImportantDto dto) {
            ImportantObject important = mapper.toImportantObject(dto);
            ImportantObject processed = service.process(important);
            return repository.save(processed);
        }
    }

Separating orchestration from the actual “heavy” work is a good thing, because the two can then be tested independently. The resulting code units are smaller and thus more testable. Yet, orchestration code itself rarely benefits from unit testing. Most of the time, keeping it covered by integration tests is more than enough.

But most of the time is not the same as always. Not all orchestration code is that simple. Sometimes we can’t easily eliminate all signs of complexity. The amazing book Unit Testing Principles, Practices, and Patterns spends a whole chapter describing techniques that could help with that.

Let’s have a look at different flavors of orchestration and discuss how they could be tested.

Orchestration is different

The aforementioned Unit Testing book suggests a clever way of thinking about code complexity:

Think of the business logic and orchestration responsibilities in terms of code depth versus code width. Your code can be either deep (complex or important) or wide (work with many collaborators), but never both.

Orchestration code should be wide, but not deep. Its main job is to bring multiple dependencies into one place. Complex business logic should be located elsewhere, deep inside the collaborators.

A high number of dependencies makes unit testing cumbersome. No matter what technique we choose, all these collaborators have to be provided somehow. This issue is not specific to orchestration. The wider the code, the harder testing in isolation becomes. That’s one of the reasons why I don’t rely on unit testing alone.

I believe that every important flow of the system (at least the happy paths) should be covered by at least one integration test. Such tests play an important role, as they verify that the system as a whole works as expected. In the case of wide code, the existing test infrastructure provides all the dependencies for us. Integration tests can “touch” more code than unit tests. For parts not deserving their own unit tests, being touched by the integration tests is usually more than enough. A careful combination of unit and integration testing is what gives me high confidence in the systems I build.

The truth is: not every piece of code deserves its dedicated test! This includes things too simple to break, some configuration objects, and many kinds of glue code. Simple orchestration also falls into that category.

Orchestration code is required for the application to do the work it’s expected to do. It connects the dots by forming a certain ordered process. The corresponding tests should simply provide proof that it works. A single integration test could give us that proof for many orchestration parts at once. At the same time, it provides good resistance to refactoring, as it’s not tightly coupled to the implementation details. Therefore, it’s usually enough to cover simple orchestration with integration tests.
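As a minimal sketch of what such proof can look like, the example below wires the orchestrator from the first listing to real (if trivial) collaborators and an in-memory repository, then checks only the observable outcome. The record fields, `SimpleMapper`, `UpperCasingService`, `InMemoryRepository` and `findAll` are hypothetical stand-ins invented for illustration; a real integration test would more likely use the application's actual wiring and storage.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical data types; the article does not show their fields.
record ImportantDto(String name) {}
record ImportantObject(String name) {}

interface Mapper { ImportantObject toImportantObject(ImportantDto dto); }
interface Service { ImportantObject process(ImportantObject important); }
interface Repository {
    ImportantObject save(ImportantObject important);
    List<ImportantObject> findAll(); // assumed query method, used only for verification
}

// Trivial but real collaborators standing in for the production ones.
class SimpleMapper implements Mapper {
    public ImportantObject toImportantObject(ImportantDto dto) {
        return new ImportantObject(dto.name().trim());
    }
}

class UpperCasingService implements Service {
    public ImportantObject process(ImportantObject important) {
        return new ImportantObject(important.name().toUpperCase(Locale.ROOT));
    }
}

class InMemoryRepository implements Repository {
    private final List<ImportantObject> stored = new ArrayList<>();
    public ImportantObject save(ImportantObject important) {
        stored.add(important);
        return important;
    }
    public List<ImportantObject> findAll() { return List.copyOf(stored); }
}

class Orchestrator {
    private final Service service;
    private final Mapper mapper;
    private final Repository repository;

    Orchestrator(Service service, Mapper mapper, Repository repository) {
        this.service = service;
        this.mapper = mapper;
        this.repository = repository;
    }

    ImportantObject orchestrate(ImportantDto dto) {
        ImportantObject important = mapper.toImportantObject(dto);
        ImportantObject processed = service.process(important);
        return repository.save(processed);
    }
}

class OrchestrationFlowCheck {
    // Runs the whole flow and returns what ended up in storage.
    static List<ImportantObject> importAndList(String rawName) {
        InMemoryRepository repository = new InMemoryRepository();
        Orchestrator orchestrator =
                new Orchestrator(new UpperCasingService(), new SimpleMapper(), repository);
        orchestrator.orchestrate(new ImportantDto(rawName));
        return repository.findAll();
    }

    public static void main(String[] args) {
        System.out.println(importAndList("  widget "));
    }
}
```

The check never mentions which collaborator was called or in what order. It would keep passing if the orchestrator were restructured, as long as a trimmed, processed object still ends up stored.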

Chasing the mocking unicorn

However, some developers still believe that if there are no dedicated tests for a certain class or file, then it’s not really tested. Before even thinking about what is actually needed, they automatically create new test classes. Armed with powerful mocking frameworks, they end up with tests similar to the one below:

    import static org.assertj.core.api.Assertions.assertThat;
    import static org.mockito.Mockito.*;

    import org.junit.jupiter.api.Test;

    // warning: don't try this at home (or at work)
    class OrchestratorTest {

        @Test
        void orchestratesCorrectly() {
            var input = new ImportantDto("...");
            Service service = mock(Service.class);
            Mapper mapper = mock(Mapper.class);
            Repository repository = mock(Repository.class);
            ImportantObject mapped = new ImportantObject("...");
            when(mapper.toImportantObject(input)).thenReturn(mapped);
            when(service.process(mapped)).thenReturn(mapped);
            when(repository.save(mapped)).thenReturn(mapped);
            // constructor argument order is assumed here
            var orchestrator = new Orchestrator(service, mapper, repository);

            // everything finally set up, time to "test"!
            var result = orchestrator.orchestrate(input);

            assertThat(result).isEqualTo(mapped);
            verify(service).process(mapped);
        }
    }

Such a test provides 100% test coverage, but 0% additional confidence. It essentially clones the tested implementation using a slightly different API. It has almost no resistance to refactoring, as it’s focused on the implementation details rather than the observable behavior. In fact, the only thing it really checks is the compatibility of the types accepted and returned by the methods it calls. The problem is that, with static typing, the compiler is already doing that for us! And let’s not even start on how firmly those verify calls cement the current implementation in place…

There's room for every tool - test doubles are not an exception. Sure, it's possible to end up with fragile and pretty useless tests. But mocks can be also really useful - e.g. when verifying communication with external systems. Mockito is great when it's used appropriately. (July 23, 2022)

Writing valuable tests is as important a skill as avoiding useless ones. It’s not the number of tests or the coverage value that matters, but the confidence the test suite gives you when introducing changes. So don’t add new test classes recklessly. Take a moment to think about what kind of code you just wrote. Identify the behavior that needs verification. Check whether any of the existing integration tests already gives you proof that it works. Another unit test, especially one using mocks, may not be what you need.

The one small ‘if’

Ending this article here would be cheating. In real life, orchestration code is rarely completely if-less. Even in carefully structured codebases, we often end up with a single branch inside. Usually, it’s about some kind of validation logic to be executed:

    ImportantObject obj = mapper.map(dto);
    if (!validator.isValid(obj)) {
        // TODO: throw an exception, prepare error message, etc.
    }
    ImportantObject processed = service.process(obj);
    return repository.save(processed);

How do we test such code? Of course, it depends.

First, notice that the actual validation logic is encapsulated within the validator dependency. Alternatively, this could be a method of the domain object itself. The orchestration responsibility should end at calling the validation logic at the right moment. Exhaustive testing of the validation logic probably belongs somewhere else.

Then, we have to understand the domain significance of such a check. If it’s part of an important path in our system, it probably deserves an integration test. Such validations usually have observable consequences, such as specific HTTP status codes or changes in domain object state. That’s why testing them in isolation would not prove the correctness of the observable behavior.

Yet, with multiple or conditional validations in place, a dedicated unit test might be a good idea. Such cases may no longer qualify as “simple orchestration”.
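The sketch below shows one way to check the single branch through its observable consequences instead of through mock interactions. It rests on several assumptions that are not in the snippet above: the TODO is resolved by throwing a hypothetical `ValidationException`, the validator rejects blank names, and the mapping and processing steps are inlined or omitted to keep the example short.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical data types; the article does not show their fields.
record ImportantDto(String name) {}
record ImportantObject(String name) {}

// Assumption: a failed validation surfaces as an exception.
class ValidationException extends RuntimeException {
    ValidationException(String message) { super(message); }
}

// Assumed rule, for illustration only: the name must not be blank.
class Validator {
    boolean isValid(ImportantObject obj) { return !obj.name().isBlank(); }
}

class InMemoryRepository {
    final List<ImportantObject> stored = new ArrayList<>();
    ImportantObject save(ImportantObject obj) {
        stored.add(obj);
        return obj;
    }
}

class ValidatingOrchestrator {
    private final Validator validator;
    private final InMemoryRepository repository;

    ValidatingOrchestrator(Validator validator, InMemoryRepository repository) {
        this.validator = validator;
        this.repository = repository;
    }

    ImportantObject orchestrate(ImportantDto dto) {
        ImportantObject obj = new ImportantObject(dto.name()); // mapping inlined for brevity
        if (!validator.isValid(obj)) {
            throw new ValidationException("name must not be blank");
        }
        return repository.save(obj); // processing step omitted for brevity
    }
}

class ValidationBranchCheck {
    // Returns how many objects were stored after attempting to import the given name.
    static int storedCountAfter(String name) {
        InMemoryRepository repository = new InMemoryRepository();
        ValidatingOrchestrator orchestrator =
                new ValidatingOrchestrator(new Validator(), repository);
        try {
            orchestrator.orchestrate(new ImportantDto(name));
        } catch (ValidationException rejected) {
            // Rejected input: fall through and report what was stored.
        }
        return repository.stored.size();
    }

    public static void main(String[] args) {
        System.out.println("valid input stored: " + storedCountAfter("widget"));
        System.out.println("blank input stored: " + storedCountAfter("   "));
    }
}
```

The consequence being asserted is "nothing is saved for invalid input", which is what a caller of the system would actually notice. The validator's own rules would still be tested exhaustively elsewhere.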

It can be the business logic too!

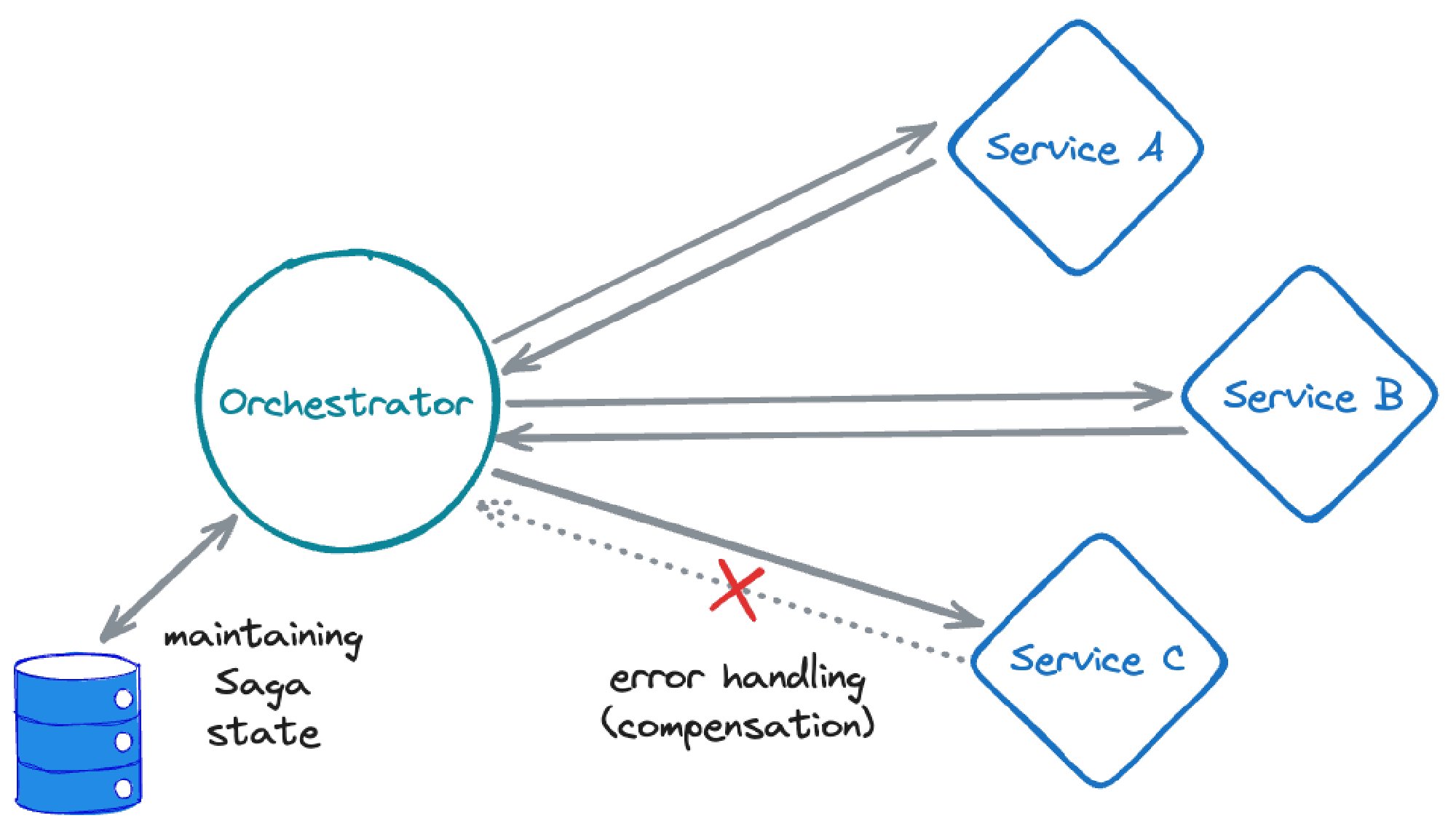

Certain orchestrators are not only complex but also tricky to test properly. Some of them should be considered actual business logic. The orchestration flavor of the Saga pattern is a perfect example. A saga orchestrator has to face many additional challenges, from reliability to error handling and compensation logic. This could be complex enough to become a separate service!

Complex orchestration usually requires a more sophisticated testing strategy. Using mocks can be the only reasonable way of simulating particular issues or testing compensation logic exhaustively. Doing the same with an integration test might feel almost impossible. The conditional logic within the saga orchestrator could benefit from unit testing. Finally, it deserves some integration tests proving that the business process works as expected.
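To make the point about simulating failures concrete, here is a minimal sketch of a saga-style orchestrator with compensation, exercised by a test double that fails on demand. Everything in it is an assumption made for illustration: the `SagaStep` interface, the in-process sequential execution, and the step names. A hand-written double is used in place of a mocking framework to keep the example dependency-free, and a production saga would additionally need persistence, retries and messaging.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical saga step: an action plus the compensation that undoes it.
interface SagaStep {
    void execute();
    void compensate();
}

class SagaOrchestrator {
    private final List<SagaStep> steps;

    SagaOrchestrator(List<SagaStep> steps) {
        this.steps = List.copyOf(steps);
    }

    // Runs the steps in order. If one fails, compensates the already
    // completed steps in reverse order and reports failure.
    boolean run() {
        List<SagaStep> completed = new ArrayList<>();
        for (SagaStep step : steps) {
            try {
                step.execute();
                completed.add(step);
            } catch (RuntimeException failure) {
                for (int i = completed.size() - 1; i >= 0; i--) {
                    completed.get(i).compensate();
                }
                return false;
            }
        }
        return true;
    }
}

// Hand-written test double: records what happened and can fail on demand.
class RecordingStep implements SagaStep {
    private final String name;
    private final boolean failOnExecute;
    private final List<String> log;

    RecordingStep(String name, boolean failOnExecute, List<String> log) {
        this.name = name;
        this.failOnExecute = failOnExecute;
        this.log = log;
    }

    public void execute() {
        if (failOnExecute) {
            throw new IllegalStateException(name + " failed");
        }
        log.add("execute " + name);
    }

    public void compensate() {
        log.add("compensate " + name);
    }
}

class SagaCheck {
    // Builds a three-step saga in which the step at failingIndex fails
    // (pass -1 for no failure) and returns the recorded event log.
    static List<String> runWithFailingStep(int failingIndex) {
        List<String> log = new ArrayList<>();
        String[] names = {"reserve", "charge", "ship"};
        List<SagaStep> steps = new ArrayList<>();
        for (int i = 0; i < names.length; i++) {
            steps.add(new RecordingStep(names[i], i == failingIndex, log));
        }
        boolean succeeded = new SagaOrchestrator(steps).run();
        log.add(succeeded ? "completed" : "rolled back");
        return log;
    }

    public static void main(String[] args) {
        System.out.println(runWithFailingStep(2));
    }
}
```

Forcing the last step to fail is trivial here and would be awkward to reproduce reliably against real services. Unlike the earlier mock-heavy example, the compensation order asserted by such a test is part of the required behavior, not an incidental implementation detail.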

Summary

Simple orchestration rarely benefits from unit testing. In most scenarios, having an integration test touching that code should be enough. Not every piece of code will benefit from a dedicated test.

Eliminating complexity from the orchestration logic is not always possible. Yet, such complexity doesn’t have to influence our testing approach. Complex orchestrators like the one from the Saga pattern are a different story, though.